Media NAS with Xeon D-1537

29 Apr 2016

I’m planning to move my current file server to a Xeon D board to reduce power consumption. While I’m at it, I also want to use my disks more efficiently. I’m currently using 3ware 9750 hardware RAID5 with multiple containers, but I’m wasting space because free space isn’t shared or pooled across containers. ZFS’ LVM-like pooling is interesting, as is the Simple configuration of Windows Server Storage Pools. (The Parity configuration of Storage Pools is extremely slow on a single node; it might scale better if I needed an entire rack of storage.)

I did some unscientific benchmark runs of Windows Server 2016 under KVM virtualization, backed by ZFS storage, and then tested Windows Server 2016’s Simple Storage Pools on bare metal. I focused on sequential reads and writes, since I mostly store large files (> 4GB).

Usage/Goals

- Store large files and also VHDs for VMs

- Make files available via SMB 3.0 for Hyper-V and Kodi on Windows 10 HTPCs

- Low power usage

- Consistent performance as I read/write large sequential files from 4-5 hosts concurrently

- Able to expand storage easily by adding a set of disks

- Add 4-6 disks at a time, and swap out existing disks as drives become cheaper

- Able to tolerate at least 1 disk failure per set of disks I add

Storage Pools

I’m creating a few RAID5 containers with my hardware RAID card. Each RAID5 container tolerates 1 device failure: I can lose up to 1 drive per container without losing any data. I then create a Simple Storage Pool on top of these hardware containers, with 2 data columns. This pools the storage across the hardware RAID5 containers and presents 1 giant partition to the OS. Storage Pools itself adds NO fault tolerance here; data is striped across 2 hardware RAID containers for greater performance, which also means at least 2 RAID5 containers are required.
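To make the 2-column striping concrete, here is a minimal Python sketch of how a Simple space could map logical offsets onto columns. It assumes a 256KB interleave (what I understand to be the default for New-VirtualDisk's -Interleave parameter) and uses hypothetical container names; the real Storage Spaces allocator works in larger slabs and is more involved, so treat this as a model of round-robin striping only.

```python
# Sketch: round-robin striping in a Simple (non-resilient) space with 2 columns.
# Assumptions (not from the post): a 256KB interleave and the hypothetical
# container names below; real Storage Spaces slab allocation is more involved.
INTERLEAVE = 256 * 1024  # bytes placed on one column before moving to the next
COLUMNS = ["raid5-set-A", "raid5-set-B"]  # hypothetical hardware RAID5 containers


def column_for_offset(offset, columns=COLUMNS, interleave=INTERLEAVE):
    """Return the column (RAID set) that holds the byte at a logical offset."""
    stripe_unit = offset // interleave
    return columns[stripe_unit % len(columns)]


def columns_touched(offset, length, columns=COLUMNS, interleave=INTERLEAVE):
    """Return the set of columns that one I/O of `length` bytes touches."""
    first = offset // interleave
    last = (offset + length - 1) // interleave
    return {columns[unit % len(columns)] for unit in range(first, last + 1)}


if __name__ == "__main__":
    print(column_for_offset(0))                      # raid5-set-A
    print(column_for_offset(256 * 1024))             # raid5-set-B
    print(sorted(columns_touched(0, 1024 * 1024)))   # ['raid5-set-A', 'raid5-set-B']
```

Any file larger than one interleave ends up on both containers, which is why losing either RAID5 set takes every large file with it.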

WARNING: If I lose any 1 of my hardware RAID5 sets (i.e. lose more than 1 drive per RAID5 set), I lose the entire pool and all files in the pool across all RAID containers in the pool. If your drives are suspect, you should probably use RAID6 to allow for up to 2 drive failures. Ensure you run hardware RAID patrol/scan/repair every week. And back up data you care about to another system.
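The failure rule above can be written as a small check. This is a sketch that assumes each hardware set tolerates a fixed number of failed drives (1 for RAID5, 2 for RAID6) and that the Simple space adds no redundancy of its own; the RaidSet class and the set names are hypothetical.

```python
# Sketch: does a Simple pool striped over hardware RAID sets survive drive failures?
# Assumptions: each set tolerates `parity` failed drives (1 = RAID5, 2 = RAID6),
# and the Simple space has no resiliency, so ANY failed set loses the whole pool.
# RaidSet and the set names are hypothetical, for illustration only.
from dataclasses import dataclass


@dataclass
class RaidSet:
    name: str
    parity: int            # failed drives the set can tolerate
    failed_drives: int = 0

    def is_readable(self):
        return self.failed_drives <= self.parity


def pool_survives(raid_sets):
    """The pool survives only if every underlying RAID set is still readable."""
    return all(s.is_readable() for s in raid_sets)


if __name__ == "__main__":
    # One drive lost in each RAID5 set: degraded, but the pool is intact.
    print(pool_survives([RaidSet("A", 1, 1), RaidSet("B", 1, 1)]))  # True
    # Two drives lost in the same RAID5 set: that set fails, and so does the pool.
    print(pool_survives([RaidSet("A", 1, 2), RaidSet("B", 1, 0)]))  # False
    # The same failures with RAID6 sets are survivable.
    print(pool_survives([RaidSet("A", 2, 2), RaidSet("B", 2, 0)]))  # True
```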

Initializing the pool

- Create a few hardware RAID5 containers, enable CacheVault, and expose them to the OS.

- Initialize the disks as GPT so that they are visible to Storage Pools.

- Add the RAID sets to a storage pool (named Media here).

- Create a new virtual disk:

New-VirtualDisk -FriendlyName Media -StoragePoolFriendlyName Media -NumberOfColumns 2 -ResiliencySettingName Simple -Size 20TB -ProvisioningType Thin

- Partition and format the new disk.

Here’s the performance I get:

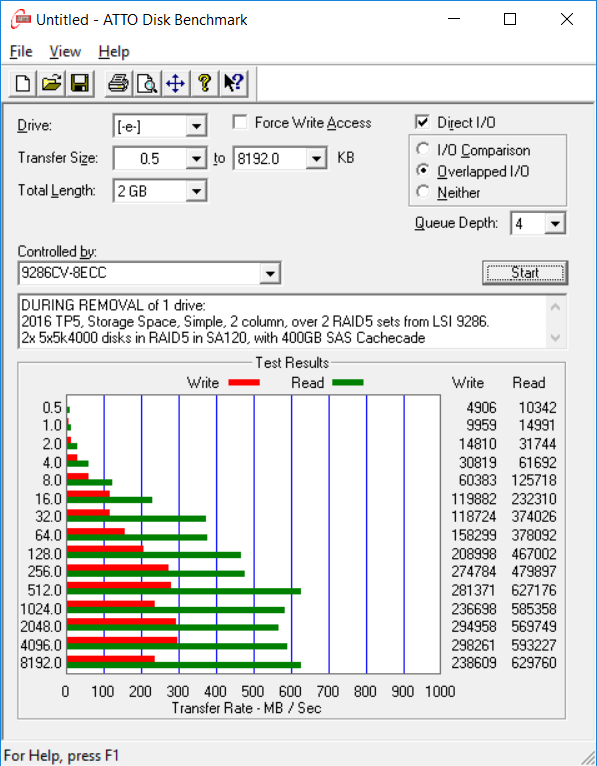
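Because the virtual disk is created with -ProvisioningType Thin and -Size 20TB, it can advertise more space than the pool actually has. A rough sketch of the over-commit arithmetic follows. The RAID5 set sizes are hypothetical (borrowed from the 4TB and 2TB test drives listed further down), pool overhead is ignored, and it assumes PowerShell's TB suffix is binary (2^40 bytes) while drive capacities are decimal.

```python
# Sketch: how far a thin-provisioned virtual disk is over-committed against its pool.
# Assumptions: the RAID5 set sizes below are hypothetical; RAID5 usable space is
# (drives - 1) x drive size; Storage Pool metadata overhead is ignored.
TB_DRIVE = 10 ** 12        # drive vendors quote decimal terabytes
TB_POWERSHELL = 2 ** 40    # PowerShell's TB suffix (as in -Size 20TB) is binary


def raid5_usable_bytes(drives, drive_tb):
    """Usable bytes of one RAID5 set: one drive's worth of space holds parity."""
    if drives < 3:
        raise ValueError("RAID5 needs at least 3 drives")
    return (drives - 1) * drive_tb * TB_DRIVE


def overcommit_bytes(virtual_disk_bytes, pool_bytes):
    """Bytes the thin disk advertises beyond what the pool can store (0 if none)."""
    return max(0, virtual_disk_bytes - pool_bytes)


if __name__ == "__main__":
    pool = raid5_usable_bytes(4, 4) + raid5_usable_bytes(3, 2)  # 16 decimal TB
    vdisk = 20 * TB_POWERSHELL                                 # -Size 20TB
    print(round(overcommit_bytes(vdisk, pool) / TB_DRIVE, 2))   # 5.99
```

With these hypothetical sets the thin disk promises roughly 6 decimal TB more than the pool can hold, so another set of disks has to go in before the volume actually fills.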

Removing a RAID set from the pool

Since the virtual disk uses 2 columns, removing a RAID set requires enough free space on 2 other RAID sets in the pool.

- Identify the disk, and note the UniqueID of the disk you’re trying to remove.

Get-PhysicalDisk | fl FriendlyName, UniqueID, Size

- Mark the disk you’re planning to remove as retired.

Set-PhysicalDisk -UniqueID XXXXXXXXXX1C5FF01EB00203XXXXXXXX -Usage Retired

- Find the affected virtual disk.

Get-VirtualDisk

- Repair the virtual disk.

Repair-VirtualDisk -FriendlyName Media

- Wait until the repair is done.

Get-StorageJob

- Rebalance the pool.

Optimize-StoragePool -FriendlyName Media

- Remove the disk from the pool.

Remove-PhysicalDisk -StoragePoolFriendlyName Media -PhysicalDisks (Get-PhysicalDisk -UniqueID XXXXXXXXXX1C5FF01EB00203XXXXXXXX)
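The space requirement for removing a RAID set can be approximated with a quick feasibility check before retiring anything. This is a simplified model, not how Storage Spaces actually places slabs: it assumes the retired set's allocated data only needs enough combined free space on the remaining sets, and that at least as many sets as columns must remain. Set names and sizes are hypothetical.

```python
# Sketch: can a RAID set be retired from a Simple space with `columns` columns?
# Simplified model (assumption): the retired set's allocated data must fit in the
# combined free space of the remaining sets, and at least `columns` sets must remain.
# Real Storage Spaces slab placement is stricter; names and sizes are hypothetical.

def can_retire(disks, retire, columns=2):
    """disks maps a set name -> (size_tb, allocated_tb)."""
    if retire not in disks:
        raise KeyError(retire)
    remaining = {name: v for name, v in disks.items() if name != retire}
    if len(remaining) < columns:
        return False  # not enough sets left to hold every column
    to_move = disks[retire][1]
    free = sum(size - used for size, used in remaining.values())
    return free >= to_move


if __name__ == "__main__":
    pool = {"A": (12.0, 6.0), "B": (12.0, 6.0), "C": (4.0, 3.0)}
    print(can_retire(pool, "C"))                                   # True
    print(can_retire({"A": (12.0, 6.0), "B": (4.0, 3.0)}, "B"))    # False
```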

Benchmarking different configurations

- Base hardware

  - Xeon D-1537 with 64GB of RAM, 1Gbps and 10Gbps SFP+ network connections

  - Testing with 4x 4TB HGST 5K4000 CoolSpin drives and 3x 2TB Samsung HD204UI drives

  - 400GB Toshiba PX02SMF040 SAS SSD for cache

  - Disks in Rackable SE3016 and Lenovo SA120 enclosures

  - Windows Server 2016 uses ReFS for the storage partition

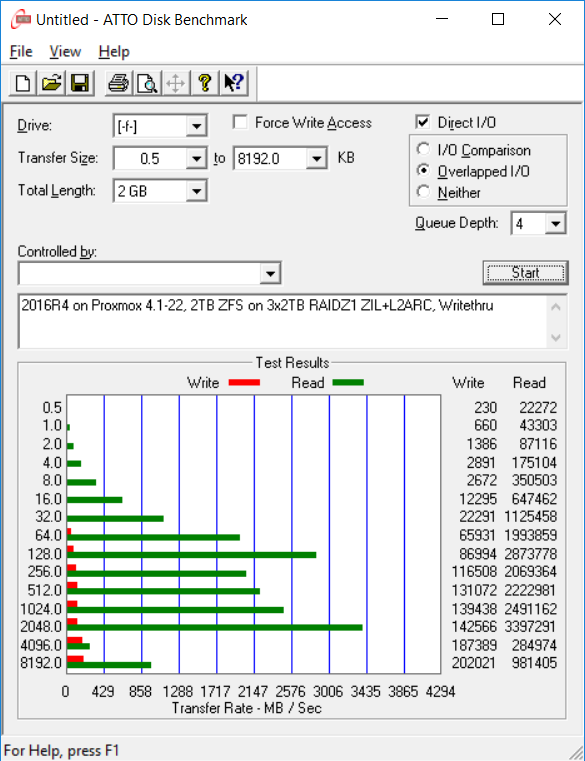

- Config 1: Windows Server 2016 TP4 on KVM, on Proxmox 4.1. 3x 2TB Samsung drives in RAIDZ1, with the 400GB SSD split 50/50 between ZIL and L2ARC

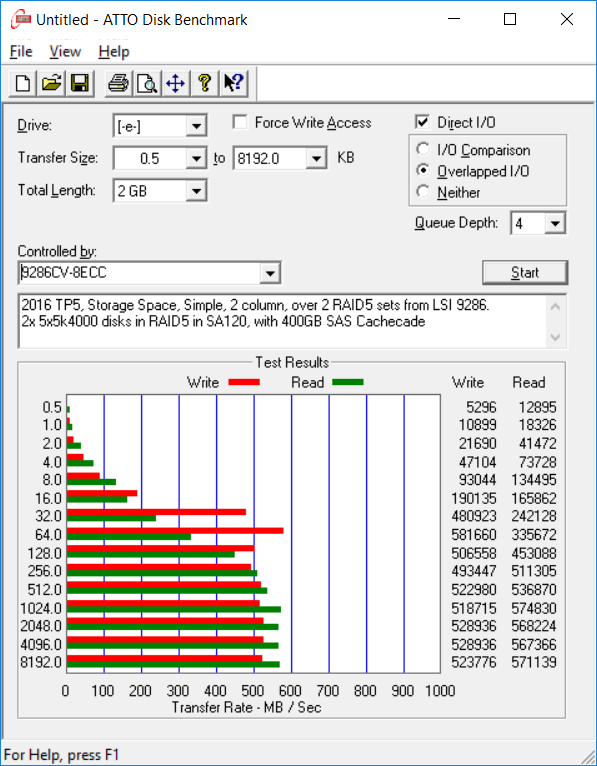

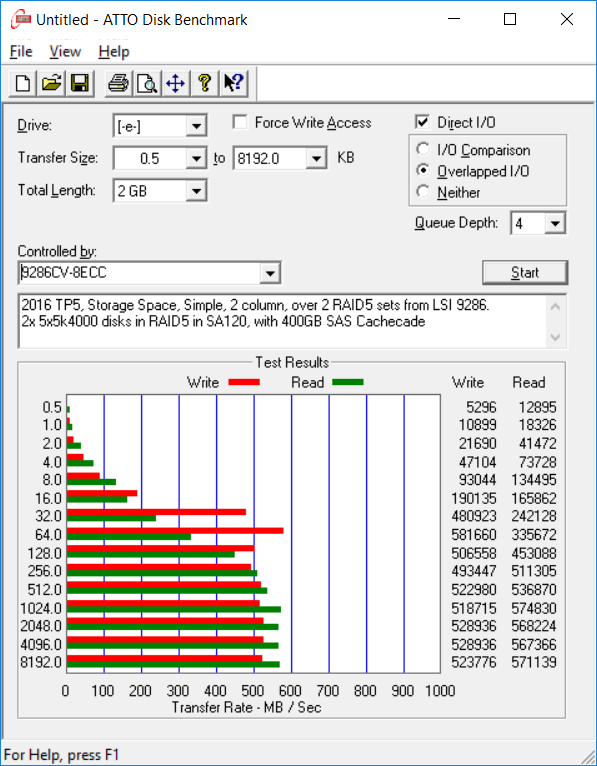

- Config 2: Windows Server 2016 TP5 on bare metal, LSI 9268CV-8eCC. Disks in hardware RAID5 with CacheVault enabled, pooled with a Simple Storage Space with 2 columns

The virtualization overhead will likely put the ZFS configuration at a significant disadvantage, but ZFS’ RAM caching should be an order of magnitude faster than hardware RAID.

ATTO performance

- Config 1

  - Write performance varied between roughly 100 and 200MB/s, which was relatively disappointing.

- Config 2

  - Read and write performance was consistent, but slower than Config 1.

Diskspd performance

ZFS’ heavy RAM caching is likely to perform well for file sizes under about 60GB, since the host has 64GB of RAM.

2GB random concurrent writes of 64KB blocks

diskspd -c2G -w -b64K -F8 -r -o32 -d10 -h testfile.dat

Total IO

| Config (8 threads) | bytes | I/Os | MB/s | I/O per s | file |
|---|---|---|---|---|---|
| Config1 | 35203448832 | 537162 | 3242.57 | 51881.09 | 2GB |
| Config2 | 2291793920 | 34970 | 218.54 | 3496.60 | 2GB |
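The columns in these diskspd tables are related by simple arithmetic, which makes it easy to sanity-check a row. A sketch, assuming diskspd's MB/s column is MiB/s (2^20 bytes); that reading is what makes the bytes, I/Os, MB/s and I/O per s columns agree with each other.

```python
# Sketch: cross-check one row of a diskspd "Total IO" table.
# Assumption: the MB/s column is MiB/s (2**20 bytes); with that reading the
# bytes, I/Os, MB/s and I/O-per-second columns are mutually consistent.
MIB = 2 ** 20


def block_size(total_bytes, ios):
    """Average bytes per I/O; should match the -b block size."""
    return total_bytes / ios


def elapsed_seconds(total_bytes, mib_per_s):
    """Run time implied by the throughput column."""
    return total_bytes / MIB / mib_per_s


def iops_from_throughput(mib_per_s, block_bytes):
    """I/O per second = throughput / block size."""
    return mib_per_s * MIB / block_bytes


if __name__ == "__main__":
    rows = {  # copied from the 2GB random-write table
        "Config1": (35203448832, 537162, 3242.57),
        "Config2": (2291793920, 34970, 218.54),
    }
    for name, (total, ios, mibps) in rows.items():
        bs = block_size(total, ios)
        print(name, bs, round(elapsed_seconds(total, mibps), 2),
              round(iops_from_throughput(mibps, bs), 2))
```

For both rows this recovers the 64KB block size (-b64K). Config1's numbers imply about 10.35 seconds of run time rather than the nominal -d10, while Config2's imply almost exactly 10 seconds.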

2GB sequential concurrent writes of 64KB blocks

diskspd -c2G -w -b64K -F8 -T1b -s8b -o32 -d10 -h testfile.dat

Total IO

| Config (8 threads) | bytes | I/Os | MB/s | I/O per s | file |
|---|---|---|---|---|---|
| Config1 | 54970941440 | 838790 | 3193.67 | 51098.78 | 2GB |
| Config2 | 1295515648 | 19768 | 123.43 | 1974.92 | 2GB |

2GB sequential serial writes of 64KB blocks

diskspd -c2G -w -b64K -o1 -d10 -h testfile.dat

Total IO

| Config (1 thread) | bytes | I/Os | MB/s | I/O per s | file |
|---|---|---|---|---|---|
| Config1 | 6997934080 | 106780 | 667.37 | 10677.96 | 2GB |
| Config2 | 1118633984 | 17069 | 106.62 | 1706.00 | 2GB |

Now for some larger files, more like what I’m actually looking to store.

64GB sequential I/O writes [1]

diskspd -w100 -d600 -W300 -b512K -t1 -o4 -h -L -Z1M -c64G testfile.dat

Total IO

| Config (1 thread) | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file |
|---|---|---|---|---|---|---|---|
| Config1 | 41702391808 | 79541 | 66.28 | 132.57 | 30.168 | 46.099 | 64GB |
| Config2 | 174029012992 | 331934 | 276.61 | 553.21 | 7.228 | 19.125 | 64GB |
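Because diskspd keeps a fixed number of I/Os in flight (threads × -o), Little's law links the IOPS and average latency columns: IOPS ≈ outstanding I/Os / average latency. A minimal sketch using the -t1 -o4 settings from the 64GB write command:

```python
# Sketch: Little's law for a closed-loop diskspd run.
# diskspd keeps threads x -o I/Os outstanding, so IOPS ~= outstanding / average latency.

def expected_iops(threads, queue_depth, avg_latency_ms):
    outstanding = threads * queue_depth
    return outstanding / (avg_latency_ms / 1000.0)


if __name__ == "__main__":
    # -t1 -o4 from the 64GB sequential-write command; latencies from the table above.
    for name, lat_ms, measured in [("Config1", 30.168, 132.57), ("Config2", 7.228, 553.21)]:
        print(name, round(expected_iops(1, 4, lat_ms), 1), "vs measured", measured)
```

Both predictions land within about 0.2 IOPS of the measured values, so with the queue depth fixed, Config2's roughly 4x higher throughput here comes from its lower per-I/O latency. The same check on the OLTP run (-t4 -o4, 16 outstanding) gives 16 / 2.082ms ≈ 7,685 IOPS for Config1, against 7,667.86 measured.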

50GB reads, 32 threads [2]

diskspd -b4K -d60 -h -o128 -t32 -si -c50G testfile.dat

Total IO

| Config (32 threads) | bytes | I/Os | MB/s | I/O per s | file |
|---|---|---|---|---|---|
| Config1 | 13471064064 | 3288834 | 214.11 | 54813.08 | 50GB |
| Config2 | 6388801536 | 1559766 | 101.53 | 25991.85 | 50GB |

50GB reads, Config2 only

| Threads | bytes | I/Os | MB/s | I/O per s |
|---|---|---|---|---|
| 1 | 14566580224 | 3556294 | 231.51 | 59265.62 |
| 2 | 11763695616 | 2871996 | 186.98 | 47866.67 |
| 3 | 15360860160 | 3750210 | 244.13 | 62496.02 |
| 4 | 11080908800 | 2705300 | 176.09 | 45077.76 |
| 5 | 14989860864 | 3659634 | 238.23 | 60986.97 |
| 6 | 15076560896 | 3680801 | 239.58 | 61331.65 |

SQL OLTP-type workloads [3]

diskspd -b8K -d2 -h -L -o4 -t4 -r -w20 -Z1G -c50G testfile.dat

Total IO

| Config (4 threads) | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file |
|---|---|---|---|---|---|---|---|
| Config1 | 126582784 | 15452 | 59.91 | 7667.86 | 2.082 | 5.453 | 50GB |
| Config2 | 30842880 | 3765 | 14.71 | 1882.47 | 8.524 | 17.877 | 50GB |

Read IO

| Config (4 threads) | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file |
|---|---|---|---|---|---|---|---|
| Config1 | 101990400 | 12450 | 48.27 | 6178.16 | 0.133 | 0.222 | 50GB |
| Config2 | 24829952 | 3031 | 11.84 | 1515.48 | 9.952 | 18.989 | 50GB |

Write IO

| Config (4 threads) | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file |
|---|---|---|---|---|---|---|---|
| Config1 | 24592384 | 3002 | 11.64 | 1489.70 | 10.166 | 8.469 | 50GB |
| Config2 | 6012928 | 734 | 2.87 | 366.99 | 2.628 | 10.347 | 50GB |
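The OLTP run uses -w20, which I read as 20% of requests being writes, so the Read IO and Write IO tables should split roughly 80/20, and the Total IO average latency should be the I/O-weighted mean of the read and write averages. A sketch checking both with the values from the tables above:

```python
# Sketch: check the OLTP run's read/write split against -w20 (20% writes, as I
# read that flag) and rebuild the Total IO average latency as an I/O-weighted mean.
# Values are copied from the Read IO and Write IO tables above.

def write_fraction(read_ios, write_ios):
    return write_ios / (read_ios + write_ios)


def weighted_latency_ms(read_ios, read_ms, write_ios, write_ms):
    """Average latency over all I/Os, given per-direction averages."""
    return (read_ios * read_ms + write_ios * write_ms) / (read_ios + write_ios)


if __name__ == "__main__":
    runs = {
        "Config1": (12450, 0.133, 3002, 10.166),
        "Config2": (3031, 9.952, 734, 2.628),
    }
    for name, (r, r_ms, w, w_ms) in runs.items():
        print(name, f"{write_fraction(r, w):.1%}",
              round(weighted_latency_ms(r, r_ms, w, w_ms), 3))
```

This gives about 19.4% and 19.5% writes and reproduces the 2.082ms and 8.524ms total averages. Config1's total latency is dominated by its slow writes, while Config2's is dominated by its slow reads.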

Config 1 Response time

| %-ile | Read (ms) | Write (ms) | Total (ms) |
|---|---|---|---|
| min | 0.043 | 1.919 | 0.043 |
| 25th | 0.093 | 3.876 | 0.097 |
| 50th | 0.115 | 6.803 | 0.131 |
| 75th | 0.153 | 15.196 | 0.205 |
| 90th | 0.192 | 22.814 | 6.321 |
| 95th | 0.216 | 28.896 | 15.087 |
| 99th | 0.280 | 34.100 | 28.732 |
| 3-nines | 0.882 | 35.121 | 34.550 |
| 4-nines | 12.319 | 35.213 | 35.176 |
| 5-nines | 19.003 | 35.213 | 35.213 |
| 6-nines | 19.003 | 35.213 | 35.213 |
| 7-nines | 19.003 | 35.213 | 35.213 |
| 8-nines | 19.003 | 35.213 | 35.213 |
| max | 19.003 | 35.213 | 35.213 |

Config 2 Response time

| %-ile | Read (ms) | Write (ms) | Total (ms) |
|---|---|---|---|
| min | 0.097 | 0.087 | 0.087 |
| 25th | 0.208 | 0.111 | 0.190 |
| 50th | 0.242 | 0.130 | 0.225 |
| 75th | 11.344 | 0.153 | 8.870 |
| 90th | 34.800 | 0.226 | 31.081 |
| 95th | 54.517 | 28.262 | 50.875 |
| 99th | 81.996 | 53.334 | 78.387 |
| 3-nines | 122.808 | 79.380 | 122.808 |
| 4-nines | 149.823 | 79.380 | 149.823 |
| 5-nines | 149.823 | 79.380 | 149.823 |
| 6-nines | 149.823 | 79.380 | 149.823 |
| 7-nines | 149.823 | 79.380 | 149.823 |
| 8-nines | 149.823 | 79.380 | 149.823 |
| max | 149.823 | 79.380 | 149.823 |
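The 5-nines through max rows are identical because the OLTP run was short (-d2), so there are too few samples to resolve the far tail. A sketch under the assumption that percentiles are computed by nearest rank (diskspd's exact method may differ) finds the first "nines" row that is just the slowest single I/O:

```python
# Sketch: at which "nines" does a nearest-rank percentile collapse onto the maximum?
# Assumption: nearest-rank percentiles; diskspd's own method may differ in detail.

def nines_rank(k, n):
    """1-based nearest rank of the k-nines percentile (k=2 -> 99th) among n samples."""
    scale = 10 ** k
    return -(-n * (scale - 1) // scale)  # exact integer ceil(n * (1 - 10**-k))


def first_nines_at_max(n, max_k=8):
    """Smallest k whose k-nines percentile is simply the largest sample."""
    for k in range(1, max_k + 1):
        if nines_rank(k, n) >= n:
            return k
    return None  # more than max_k nines are resolvable with n samples


if __name__ == "__main__":
    # I/O counts from the OLTP Read IO and Write IO tables (a 2-second -d2 run).
    counts = {"Config1 read": 12450, "Config1 write": 3002,
              "Config2 read": 3031, "Config2 write": 734}
    for name, n in counts.items():
        print(name, first_nines_at_max(n))
```

For these I/O counts it returns 5, 4, 4 and 3, which matches where each column of the two response-time tables stops changing; resolving more nines would need a much longer run.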

1. http://www.heraflux.com/knowledge/utilities/diskspd-batch/

2. http://www.davidklee.net/2015/04/01/storage-benchmarking-with-diskspd/