ZFS on Xeon D-1537 with Proxmox

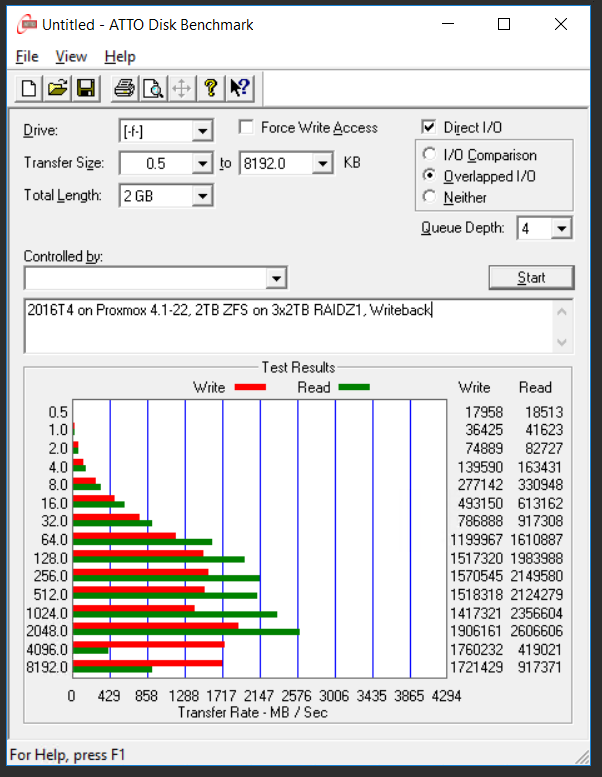

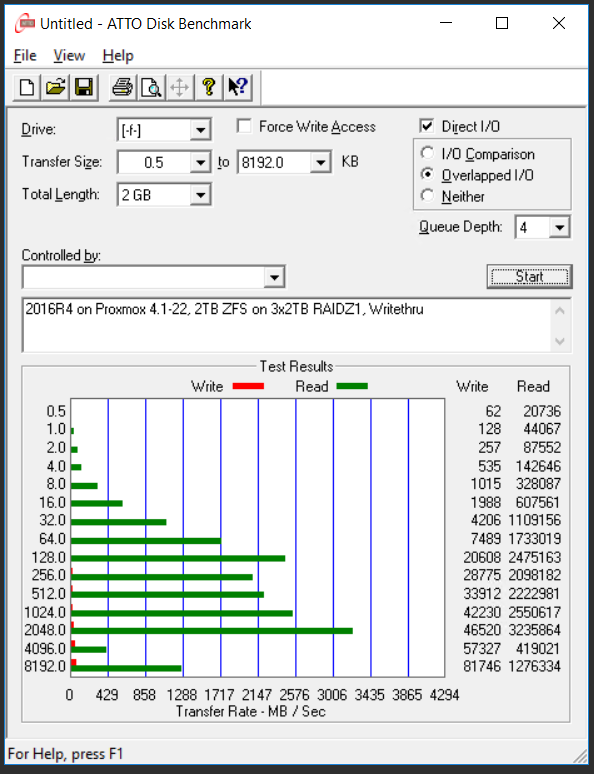

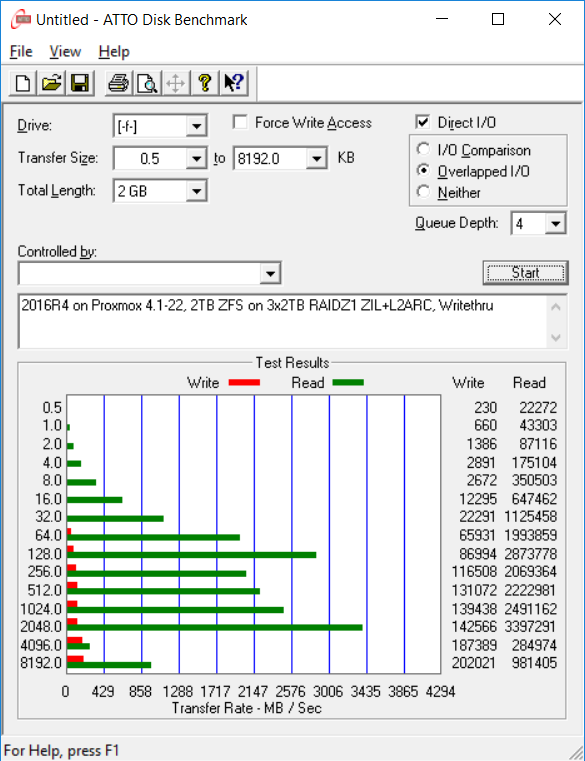

16 Apr 2016

I installed Proxmox 4.1-22 on my Xeon D-1537 system to benchmark ZFS. I then installed Windows Server 2016 TP4 as a KVM guest and benchmarked the storage from inside the VM using ATTO and diskspd.[1]

- Motherboard: Supermicro MBD-X10SDV-7TP4F (Xeon D-1537)

- Storage: Lenovo SA120 enclosure connected to the onboard LSI SAS HBA

- 3x Samsung HD204UI 2TB 5400RPM spinners

- 400GB Toshiba PX02SMF040 SAS SSD for ZIL/L2ARC

- Memory: 4x Samsung 16GB DDR4-2133 (PC4-17000) registered ECC DIMMs, M393A2G40DB0-CPB (64GB total)

ATTO

Diskspd

Large area random concurrent writes of 64KB blocks

diskspd -c2G -w -b64K -F8 -r -o32 -d10 -h testfile.dat

Total IO

| thread | bytes | I/Os | MB/s | I/O per s | file size |
|---|---:|---:|---:|---:|---|
| total (8 threads) | 35203448832 | 537162 | 3242.57 | 51881.09 | 2GB |

Large area sequential concurrent writes of 64KB blocks

diskspd -c2G -w -b64K -F8 -T1b -s8b -o32 -d10 -h testfile.dat

Total IO

| thread | bytes | I/Os | MB/s | I/O per s | file size |
|---|---:|---:|---:|---:|---|
| total (8 threads) | 54970941440 | 838790 | 3193.67 | 51098.78 | 2GB |

Large area sequential serial writes of 64KB blocks

diskspd -c2G -w -b64K -o1 -d10 -h testfile.dat

Total IO

| thread | bytes | I/Os | MB/s | I/O per s | file size |
|---|---:|---:|---:|---:|---|
| total | 6997934080 | 106780 | 667.37 | 10677.96 | 2GB |
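
The columns in these tables are tied together by simple arithmetic: bytes divided by I/Os gives the block size, and bytes divided by MB/s gives the time the tool actually measured. The sketch below recomputes both for the three 64KB runs, assuming the MB/s column means MiB (2^20 bytes) per second; that assumption reproduces the 10 second duration of the serial run exactly. The run labels and helper names are hypothetical, and the numbers are copied from the tables.

```python
# Recompute block size and measured duration from DiskSpd "Total IO" rows.
# Assumption: DiskSpd's "MB/s" column is MiB/s (2**20 bytes per second).
MIB = 2 ** 20

# (label, bytes, I/Os, MB/s, I/O per s) copied from the tables above.
RUNS = [
    ("random concurrent, -F8 -o32", 35203448832, 537162, 3242.57, 51881.09),
    ("sequential concurrent, -F8 -o32", 54970941440, 838790, 3193.67, 51098.78),
    ("sequential serial, -o1", 6997934080, 106780, 667.37, 10677.96),
]


def block_size(total_bytes: int, ios: int) -> float:
    """Average bytes per I/O; should equal the -b block size."""
    return total_bytes / ios


def elapsed_seconds(total_bytes: int, mib_per_s: float) -> float:
    """Measured duration implied by total bytes and reported throughput."""
    return total_bytes / MIB / mib_per_s


for label, total_bytes, ios, mibps, iops in RUNS:
    # Cross-check: duration from throughput vs. duration from I/O rate.
    print(f"{label}: block={block_size(total_bytes, ios):.0f} B, "
          f"elapsed={elapsed_seconds(total_bytes, mibps):.2f} s "
          f"(from I/Os: {ios / iops:.2f} s)")
```

Every run comes out at exactly 65,536 bytes per I/O. The serial run implies 10.00 s, matching -d10, while the two concurrent runs imply roughly 10.35 s and 16.4 s, with the MB/s and I/O per s columns agreeing with each other in both cases.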

Sequential large I/O writes[2]

diskspd -w100 -d600 -W300 -b512K -t1 -o4 -h -L -Z1M -c64G testfile.dat

Total IO

| thread | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file size |
|---|---:|---:|---:|---:|---:|---:|---|
| total | 41702391808 | 79541 | 66.28 | 132.57 | 30.168 | 46.099 | 64GB |
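
The average latency in this run follows from Little's law: when a load generator keeps a fixed number of I/Os in flight, average latency is roughly the number in flight divided by the completion rate. With -t1 -o4 there are four outstanding 512KB requests. The sketch below checks that against the reported figures; it assumes the queue stayed full for the whole run, and the function name is hypothetical.

```python
# Little's law for a closed-loop benchmark: L = lambda * W, so W = L / lambda.
# L = I/Os kept in flight (threads * outstanding per thread),
# lambda = completed I/Os per second, W = average latency.


def expected_avg_latency_ms(threads: int, outstanding_per_thread: int, iops: float) -> float:
    """Average latency in ms implied by queue depth and measured IOPS."""
    in_flight = threads * outstanding_per_thread
    return in_flight / iops * 1000.0


# Sequential large I/O writes: -t1 -o4, 132.57 I/O per s, AvgLat 30.168 ms.
predicted = expected_avg_latency_ms(threads=1, outstanding_per_thread=4, iops=132.57)
print(f"predicted {predicted:.3f} ms vs reported 30.168 ms")
```

At 512KB per I/O, 132.57 I/O per s is the reported 66.28 MB/s, so the roughly 30 ms average is simply four large writes queued against a pool that completes about 132 of them per second.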

Reads[3]

diskspd -b4K -d60 -h -o128 -t32 -si -c50G testfile.dat

Total IO

| thread | bytes | I/Os | MB/s | I/O per s | file size |
|---|---:|---:|---:|---:|---|
| total (32 threads) | 13471064064 | 3288834 | 214.11 | 54813.08 | 50GB |

SQL OLTP-type workloads[4]

diskspd -b8K -d2 -h -L -o4 -t4 -r -w20 -Z1G -c50G testfile.dat

Total IO

| thread | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file size |
|---|---:|---:|---:|---:|---:|---:|---|
| total (4 threads) | 126582784 | 15452 | 59.91 | 7667.86 | 2.082 | 5.453 | 50GB |

Read IO

| thread | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file size |
|---|---:|---:|---:|---:|---:|---:|---|
| total (4 threads) | 101990400 | 12450 | 48.27 | 6178.16 | 0.133 | 0.222 | 50GB |

Write IO

| thread | bytes | I/Os | MB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file size |
|---|---:|---:|---:|---:|---:|---:|---|
| total (4 threads) | 24592384 | 3002 | 11.64 | 1489.70 | 10.166 | 8.469 | 50GB |
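
The OLTP run mixes reads and writes (-w20), so its total row is a blend of the read and write rows. The sketch below, using numbers copied from the three tables, checks that the total average latency is the I/O-weighted mean of the read and write latencies and that the write share lands near the requested 20%. The helper name is hypothetical.

```python
# Read/write breakdown of the SQL OLTP-type run (-w20), copied from the tables.
READ_IOS, READ_LAT_MS = 12450, 0.133
WRITE_IOS, WRITE_LAT_MS = 3002, 10.166
TOTAL_IOS, TOTAL_LAT_MS = 15452, 2.082


def weighted_avg_latency(parts: list[tuple[int, float]]) -> float:
    """Mean latency across I/O classes, weighted by each class's I/O count."""
    total = sum(count for count, _ in parts)
    return sum(count * lat for count, lat in parts) / total


blended = weighted_avg_latency([(READ_IOS, READ_LAT_MS), (WRITE_IOS, WRITE_LAT_MS)])
write_share = WRITE_IOS / TOTAL_IOS

print(f"blended latency {blended:.3f} ms (reported {TOTAL_LAT_MS} ms)")
print(f"write share {write_share:.1%} (requested 20%)")
```

The blend matches the reported 2.082 ms, and it shows where that number comes from: reads averaged 0.133 ms, but the writes, at only a 19.4% share, contribute about 95% of the summed latency.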

Response time

| %-ile | Read (ms) | Write (ms) | Total (ms) |
|---|---:|---:|---:|
| min | 0.043 | 1.919 | 0.043 |
| 25th | 0.093 | 3.876 | 0.097 |
| 50th | 0.115 | 6.803 | 0.131 |
| 75th | 0.153 | 15.196 | 0.205 |
| 90th | 0.192 | 22.814 | 6.321 |
| 95th | 0.216 | 28.896 | 15.087 |
| 99th | 0.280 | 34.100 | 28.732 |
| 3-nines | 0.882 | 35.121 | 34.550 |
| 4-nines | 12.319 | 35.213 | 35.176 |
| 5-nines | 19.003 | 35.213 | 35.213 |
| 6-nines | 19.003 | 35.213 | 35.213 |
| 7-nines | 19.003 | 35.213 | 35.213 |
| 8-nines | 19.003 | 35.213 | 35.213 |
| max | 19.003 | 35.213 | 35.213 |
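
Several rows at the bottom of the response-time table simply repeat the max. That is a sample-size effect: this was a 2-second run (-d2) with 12,450 reads, 3,002 writes and 15,452 I/Os in total, and a k-nines percentile can only differ from the maximum when there are at least 10^k samples. The sketch below shows this using the nearest-rank percentile definition, which is an assumption (DiskSpd may compute percentiles differently in detail); the function names are hypothetical.

```python
# Nearest-rank percentile index for a "k-nines" percentile (99%, 99.9%, ...).
# Assumption: nearest-rank definition; DiskSpd may compute percentiles differently.


def nines_rank(n_samples: int, k: int) -> int:
    """1-based rank of the (1 - 10**-k) percentile among n_samples sorted values.

    Uses exact integer arithmetic: ceil(n * (10**k - 1) / 10**k).
    """
    scale = 10 ** k
    return -(-n_samples * (scale - 1) // scale)


def first_nines_equal_to_max(n_samples: int) -> int:
    """Smallest k for which the k-nines percentile is the largest sample."""
    k = 1
    while nines_rank(n_samples, k) < n_samples:
        k += 1
    return k


# Sample counts from the SQL OLTP-type run above.
for name, n in [("read", 12450), ("write", 3002), ("total", 15452)]:
    print(f"{name}: {n} samples, k-nines equals max from k = {first_nines_equal_to_max(n)}")
```

This lines up with the table: reads repeat their 19.003 ms max from the 5-nines row onward, writes repeat 35.213 ms from the 4-nines row, and the total column repeats 35.213 ms from the 5-nines row. Under this definition, resolving the 5-nines row would need at least 100,000 samples per column.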

Comments

- Running diskspd -b4K -d60 -h -o128 -t32 -si -c50G testfile.dat got me 54k read IOPS.[3] Nice.

- I’m not able to test the 10GbE/SFP+ ports just yet.

- I can saturate a 1Gbps link with SMB transfers to the storage, but I haven't yet been able to find out how much faster it will go.

Conclusion

I’m disappointed with the write performance. I plan to use this storage primarily for large files, so the inconsistent write performance in ATTO (peaking at around 200MB/s) and the poor large sequential write throughput in diskspd (66.28MB/s) mean that I either need to add more drives or find another solution.

I didn’t have more drives to test with, unfortunately.

[1] http://www.heraflux.com/knowledge/utilities/diskspd-batch/

[2] http://www.davidklee.net/2015/04/01/storage-benchmarking-with-diskspd/