Adventures with hardware RAID cards for mass storage

07 Jul 2014

I need to store lots of media files in a centralized place at my house. Most of my media players run XBMC, Windows Media Center, or just VLC, so I prefer SMB file shares. Centralizing also lets me redirect local document storage away from laptops and desktops, so I can run CrashPlan and AeroFS on the server and sprinkle in some VSS and even some Server 2012 R2 Storage Pools in various places [1] for a nice safety net. Old 8mm family films are being digitized and edited, and ATSC recordings from my HDHomeRun, made through XBMC and Media Center, get dumped onto it constantly, so there's a lot of traffic.

While I see lots of ZFS-based deployments, I wanted to document my experience with server chassis, SAS expanders, HBAs, and hardware RAID cards on Windows Server. I'm willing to devote some space to it, but it needs to run relatively quietly in my house, and I want good enough performance to handle video playback on multiple machines at the same time.

So, given my needs/environment, what hardware works best for me?

Hardware

- RAID Controllers: LSI 9271-4i with CacheVault LSICVM01, Adaptec 6805 with AFM-600, 3ware 9750-8i with LSIiBBU07.

- HBAs: Adaptec 71605H, IBM M1015/LSI 9240-8i.

- Chassis: SuperMicro SC 846E26-R1200B rackmount 4U chassis, CSE-M35T-1B 3x5.25” to 5x3.5” hotswap, Rackable SE3016 16 drive 3Gbps hotswap JBOD w/SAS SFF-8088.

- Rack enclosure: StarTech 2636 19U.

- Spinning disks: Hitachi/HGST 5K3000, 5K4000, 7K3000, 7K4000, WD Se 4TB (WD4000F9YZ), WD RE4 1TB, WD XE900 900GB SAS, Seagate 15K.7 ST3600057SS, Seagate 15K.7SED ST3600957SS, Samsung HD203WI, HD204UI.

- SSDs: SanDisk Extreme II 480GB, Intel G2 160GB, Intel DC S3500 800GB, Intel DC S3700 200GB, Intel 530 180GB, Crucial M500 256GB.

- Systems/Boards: SuperMicro X10SLH-F, SuperMicro A1SRI-2758F-O, SuperMicro X9SCL+-F-O, with various Xeon processors to match (the A1SRI-2758F-O has an onboard Atom C2758).

A few random notes/comments: I have a mix of SAS and SATA disks. I haven't tried any Areca cards to date. I stay away from Seagate's desktop (non-enterprise) drives. I use SSDs for the VMs and systems that run on top of this centralized storage, so SSD/HDD hybrid RAID1 or SSD caching interests me.

The Setup

I used to have around 10 Samsung 2TB spinners (HD203WI, HD204UI) in JBOD. I had issues with the Antec EarthWatts power supply: the jungle of SATA power adapters would randomly work loose, dropping one drive at a time. This drove me to get a better enclosure. I also wanted somewhat more redundancy than I could build via replication, and dropping files onto the right disk with enough free space was becoming a nuisance.

I started with an Adaptec 6805 in the SuperMicro 846E26, ran a single SFF-8087 cable to the backplane, and started loading drives in. The chassis was incredibly loud and obnoxious, so I got some acoustic foam and also picked up the StarTech 2636 19U enclosed rack. This quieted the server from around 85dB to around 45dB while keeping it cool. It also let me put my UPS inside and rackmount my switch.

My problems with the Adaptec 6805 started as I dropped in faster/larger drives, mixed in some SSDs, and upgraded to Server 2012 R2:

- I can no longer log into the web UI. After a clean install of 2012 R2, I set up my teamed NICs and configured virtual switches on them for Hyper-V VMs. Then I installed the Adaptec configuration software, and it won't let me log in: "Login Failed : Network configuration is incorrect or incomplete". The Adaptec KB is no use at all.

- When it does work, the Adaptec management software is atrocious. The old Adaptec Storage Manager v7.31.18856 was a Java app that mostly worked for configuring arrays, but it was clumsy, and critical features are hidden behind a right-click context menu. When Adaptec replaced it with maxView Storage Manager (a web app running on Tomcat), I 'upgraded' and then immediately reverted, as it was basically non-functional for me. I couldn't figure out how to delete arrays, move drives around, or create new arrays from space left on existing drives.

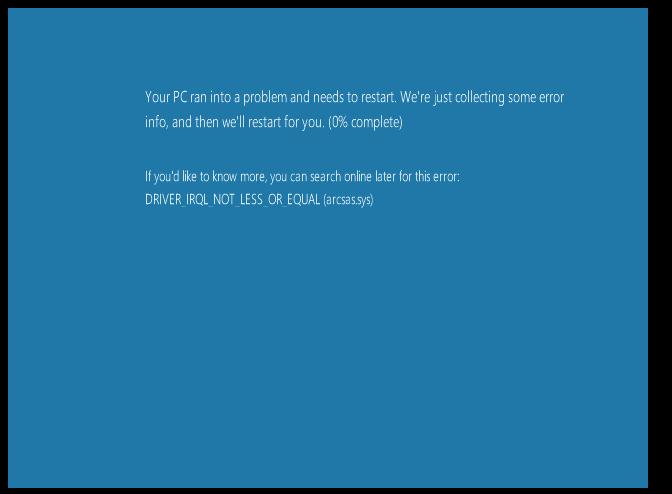

- The card started locking up. I suspected overheating (though really, this airport-sounding chassis was moving more than enough air), so I tried turning up the fans. I did clean installs when Adaptec support required it, but the lockups continued. I'd create an array and the entire machine would go out to lunch, then I'd get a kernel panic a few minutes later: "DRIVER_IRQL_NOT_LESS_OR_EQUAL (arcsas.sys)". Adaptec tech support told me to upgrade to the latest driver, firmware, and Adaptec Storage Manager, but the issues persisted. Then they told me my card was out of warranty and I'd be charged $80 for an email response.


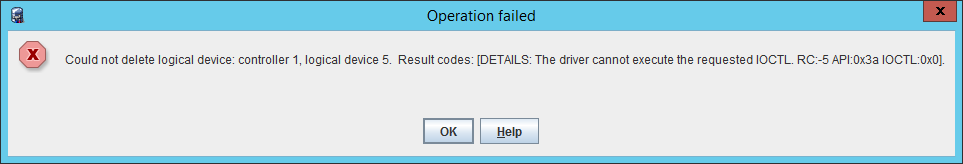

The card warns you when you mix SAS and SATA drives in one array (I wanted the Crucial SSD mirrored with a SAS drive for redundancy), but it doesn't stop you. Bad idea: the lockups continued and performance tanked. When I tried to remove my SATA SSD + SAS RAID1, the card wouldn't let me:

- I created a RAID1 from the 900GB WD Xe SAS disk and the Intel S3500 800GB SATA SSD, then connected the Intel S3700 200GB SATA SSD and created another RAID1 container from the remaining ~100GB on the 900GB WD Xe SAS disk. I then set up 3 separate RAID5 arrays (one with 5K3000 drives, another with 7K4000 drives, and another with 5K4000 drives) and also connected two individual drives as JBODs for good measure (Samsung HD103UJ and HGST 5K3000 Ultrastar).

- Next, I simulated a disk failure: I marked the S3700 SSD as bad and let the array rebuild. I was unable to silence the alarm in the maxView software. After the rebuild, I was unable to delete the RAID1 volume at all. ASM and maxView both hang now. BSOD. After a reboot, the system is completely unresponsive.

- I reinstalled Windows Server 2012 R2 on the physical server and fired up a few VMs, some with VHDs stored on the arrays and two with the JBOD disks attached directly as pass-through drives. I'm unable to do any kind of volume management:

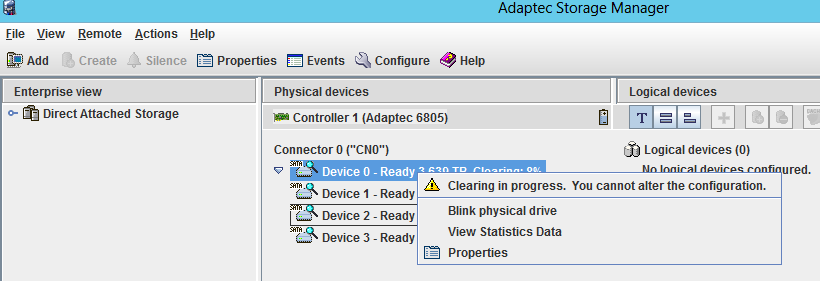

- If you start clearing disks, the adapter usually goes out to lunch for quite some time. When it does respond, it regularly tells you it can't cancel jobs:

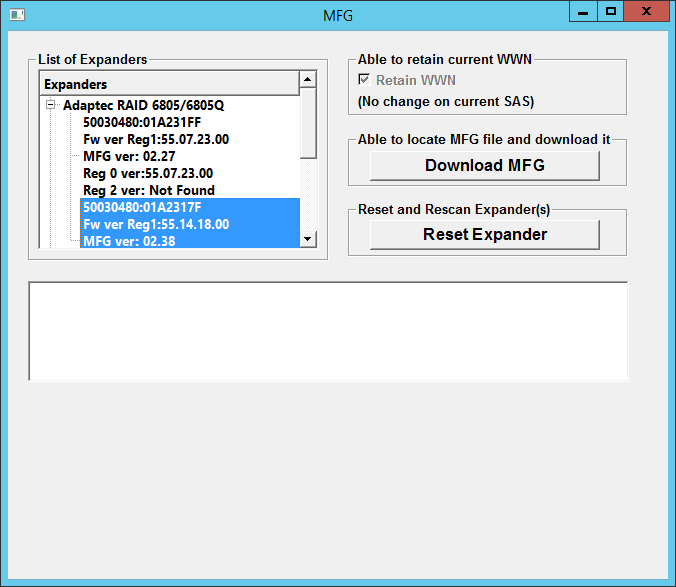

- I contacted SuperMicro for an update to the LSI backplane firmware. They sent me a sketchy Qt app that let me push new firmware to my 846E26 backplane, to no avail.

- The atrocious performance continued; I found that cranking up the chassis fans appeared to improve performance slightly:

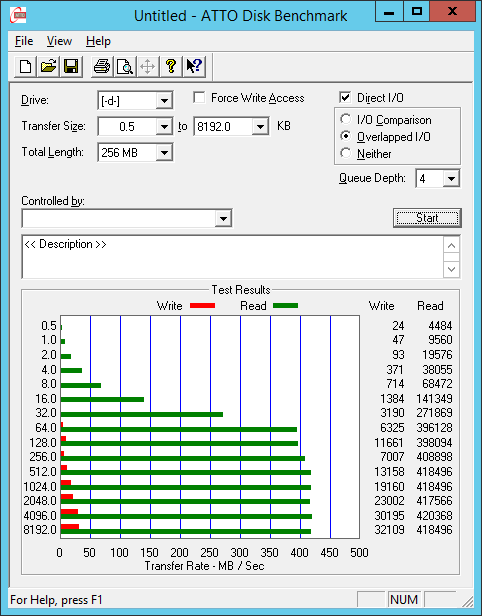

This is a 5-drive HGST 5K4000 RAID5 on an Adaptec 6805 with AFM-600 on Server 2012 R2, with an ReFS partition on it. Less than 50MB/s write performance.

- The command-line arcconf tool is extremely limited and clumsy. There's no interactive shell, so you just issue command after command, trying to configure your arrays. Stay away if you've used LSI or 3ware :)

- I ordered 3 ST3600057SS drives and received 2 ST3600057SS and 1 ST3600957SS (SED). The Adaptec card would build a RAID5, and as soon as the build hit 100%, the array would be marked dirty and start building again. The SED ST3600957SS is not supported by Adaptec, so don't try.
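The ~100GB figure in the RAID1 step comes from simple mirror arithmetic: a RAID1 can only be as large as its smaller member. Here is a minimal Python sketch of that arithmetic, assuming nominal (decimal, as-labeled) capacities and ignoring the metadata the controller reserves on each disk, which is why the real leftover is only approximately 100GB. The helper name `raid1_split` is purely illustrative.

```python
def raid1_split(disk_a_gb: int, disk_b_gb: int) -> tuple[int, int]:
    """Return (mirror size, space left over on the larger disk) in GB.

    A RAID1 mirror is limited to the size of its smaller member, so the
    larger disk's extra capacity is left unallocated. Controller
    metadata is ignored (an assumption for this sketch).
    """
    mirror = min(disk_a_gb, disk_b_gb)
    leftover = max(disk_a_gb, disk_b_gb) - mirror
    return mirror, leftover


# First mirror: 900GB WD Xe SAS disk + 800GB Intel S3500 SSD.
mirror1, spare = raid1_split(900, 800)
# Second mirror: the leftover space on the WD Xe + the 200GB Intel S3700 SSD.
mirror2, ssd_unused = raid1_split(spare, 200)
print(mirror1, spare, mirror2, ssd_unused)
```

Running it prints `800 100 100 100`: an 800GB mirror, 100GB left on the WD Xe, a 100GB second mirror, and roughly half of the S3700 left unused.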

My Configuration That Works

- Rackable SE3016 (3Gbps) with a 3ware 9750-4i4e. I can connect internal drives and hook up my external chassis, daisy-chaining as needed.

- The 3ware 3DM2 software is much nicer: responsive, simple, and predictable. Sadly, it's no longer maintained or updated.

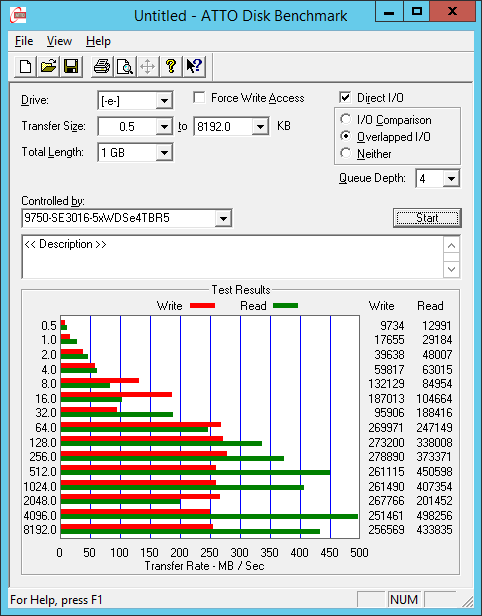

- An ATTO benchmark, unfortunately run while another array was still initializing over the same SAS link:

5 drive RAID5 of WD4000F9YZ in a Rackable SE3016 enclosure controlled by a 3ware 9750. I will post some more numbers once I’m done migrating my data.
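For a sense of scale, a 5-drive RAID5 gives up one drive's worth of space to parity. The Python sketch below (the helper `raid5_usable_bytes` is hypothetical) works out the nominal usable capacity of the WD4000F9YZ array and shows why Windows reports it as roughly 14.55 "TB": drive vendors count in powers of 10, while Windows counts in powers of 2. Controller metadata and filesystem overhead are ignored, so the real figure will be somewhat lower.

```python
def raid5_usable_bytes(drives: int, drive_tb: int) -> int:
    """Nominal usable bytes of a RAID5 array.

    RAID5 distributes one drive's worth of parity across all members,
    so usable space is (drives - 1) x drive size. Drive sizes are in
    decimal terabytes (10**12 bytes), as vendors label them.
    """
    if drives < 3:
        raise ValueError("RAID5 needs at least 3 drives")
    return (drives - 1) * drive_tb * 10**12


usable = raid5_usable_bytes(5, 4)  # 5 x 4TB WD4000F9YZ
print(f"{usable / 10**12:.0f} TB (decimal)")  # 16 TB
print(f"{usable / 2**40:.2f} TiB (binary)")   # 14.55 TiB
```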


[1] Not all of these are used on the same data, location, or time. Your mileage may vary.